B.E. in Computer Science, Shanghai Jiao Tong University (2022)

Hello! I'm Ruihan Guo (郭睿涵), a research scientist at Helixon, specializing in antibody modeling and design.

Before joining Helixon, I completed my undergraduate studies in the ACM Honors Class at Shanghai Jiao Tong University (SJTU) in June 2022, advised by Prof. Yong Yu.

My research interests focus on protein structure prediction, molecular design, and the applications of machine learning and reinforcement learning in science. Currently, I am working on motif-controlled generation and interpretable protein design.

Education

- Shanghai Jiao Tong University, B.E. in Computer Science, Sept. 2018 - Jul. 2022

Experience

- Shanghai Jiao Tong University, AEPX lab, Research Intern, Jul. 2020 - Apr. 2021
- University of Illinois Urbana-Champaign, Research Intern, Sept. 2021 - Aug. 2022
- Helixon Inc., Research Scientist, Sept. 2022 - Present

Honors & Awards

- Gold Medal, ACM-ICPC Asia-East Continent Final, Nov. 2018
- Champion, ACM-ICPC Asia Regional Contest, Hong Kong Site, Nov. 2018
- Gold Medal, ACM-ICPC Asia Regional Contest, Nanjing Site, Oct. 2018
- Gold Medal, China Collegiate Programming Contest, Qinhuangdao Site, Sept. 2018

Selected Publications

All-Atom Peptide Design by Mimicking Binding Interface

Xiangzhe Kong, Rui Jiao, Haowei Lin, Ruihan Guo, Wenbing Huang, Wei-Ying Ma, Zihua Wang, Yang Liu, Jianzhu Ma

Submitted to Nature Methods, under review. 2024

PepMimic is a machine learning model for designing peptide drug candidates by mimicking binding interfaces, achieving dissociation constants as low as $10^{-9}$ M with a success rate 20,000 times higher than random screening, and demonstrating therapeutic potential through extensive cellular and in vivo validations.

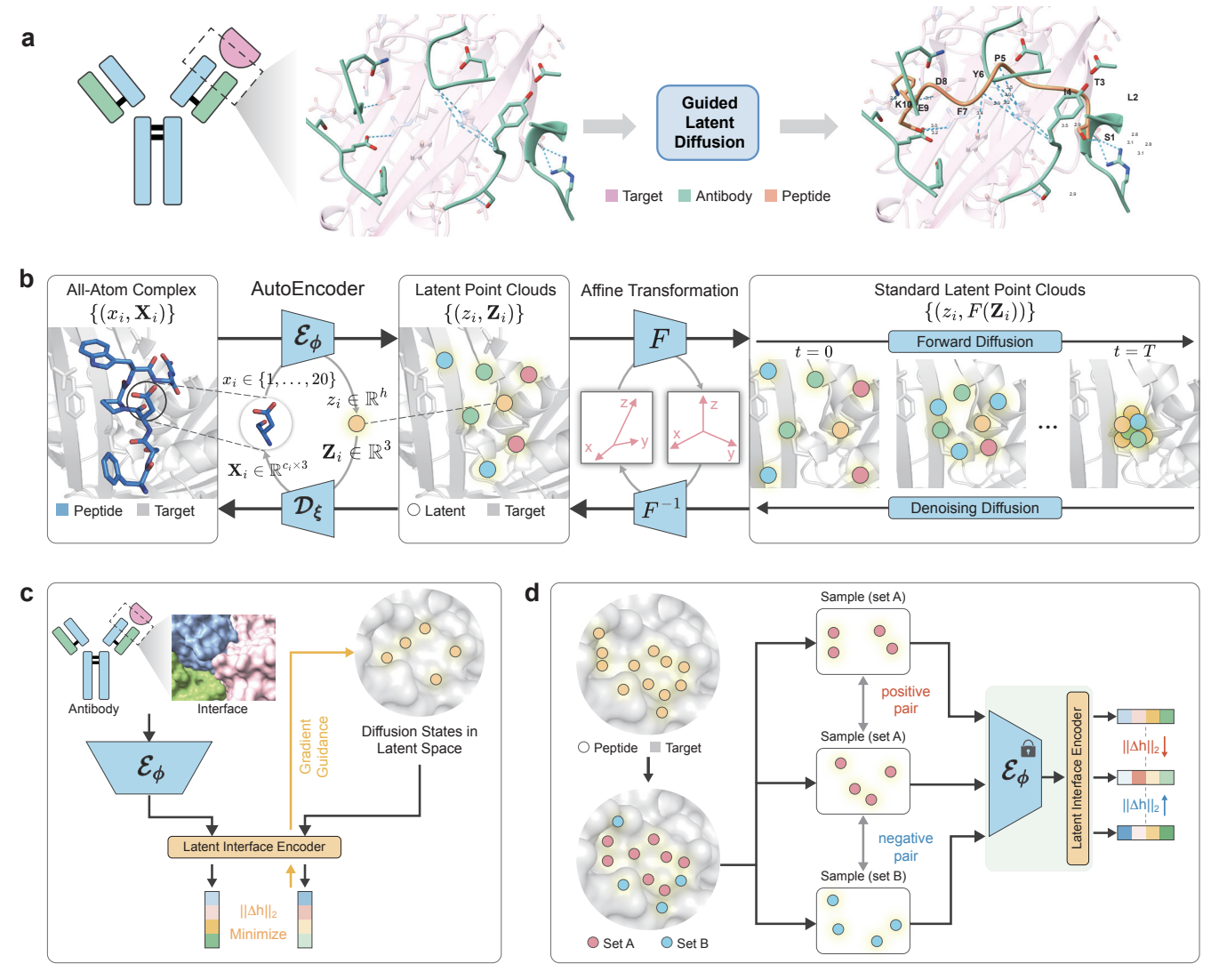

Enhancing Protein Mutation Effect Prediction through a Retrieval-Augmented Framework

Ruihan Guo*, Rui Wang*, Ruidong Wu*, Zhizhou Ren, Jiahan Li, Shitong Luo, Zuofan Wu, Qiang Liu, Jian Peng, Jianzhu Ma (* equal contribution)

NeurIPS 2024

This work introduces a retrieval-augmented framework that incorporates similar local structural motifs from a pre-trained protein structure encoder, achieving state-of-the-art performance in protein mutation effect prediction and providing a scalable solution for studying mutation impacts.

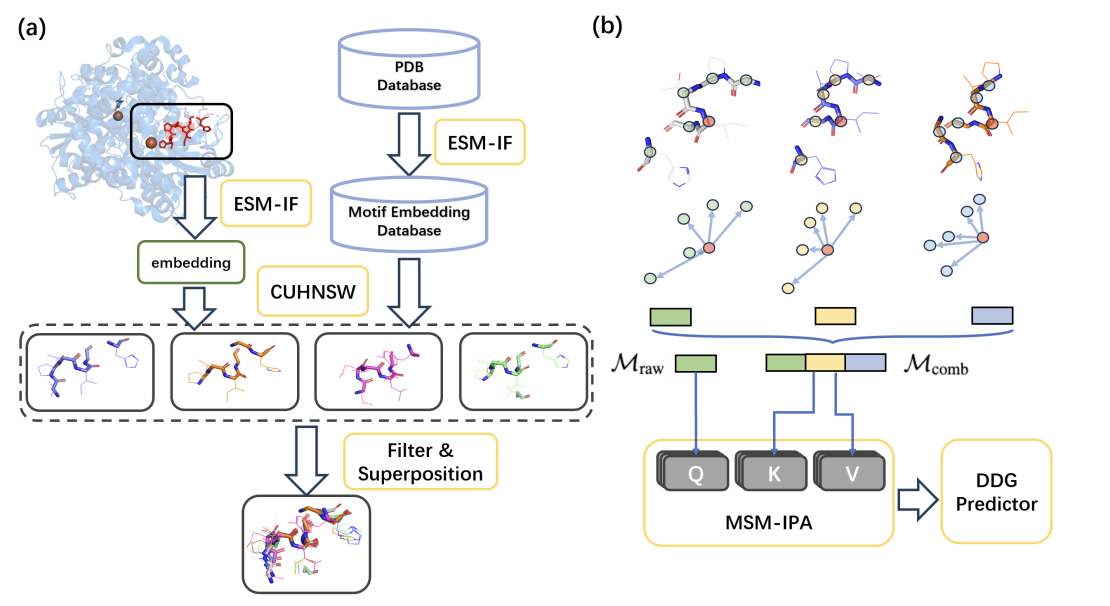

Decipher Fundamental Atomic Interactions to Unify Generative Molecular Docking and Design

Xingang Peng, Ruihan Guo, Yan Xu, Jiaqi Guan, Yinjun Jia, Yanwen Huang, Muhan Zhang, Jian Peng, Jiayu Sun, Chuanhui Han, Zihua Wang, Jianzhu Ma

Submitted to Cell, under review. 2024

PocketXMol is an all-atom model that unifies diverse molecular tasks under a single framework without fine-tuning, excelling in small molecule and peptide design, with demonstrated success in creating caspase-9 inhibitors and PD-L1-binding peptides validated in cellular and animal models.

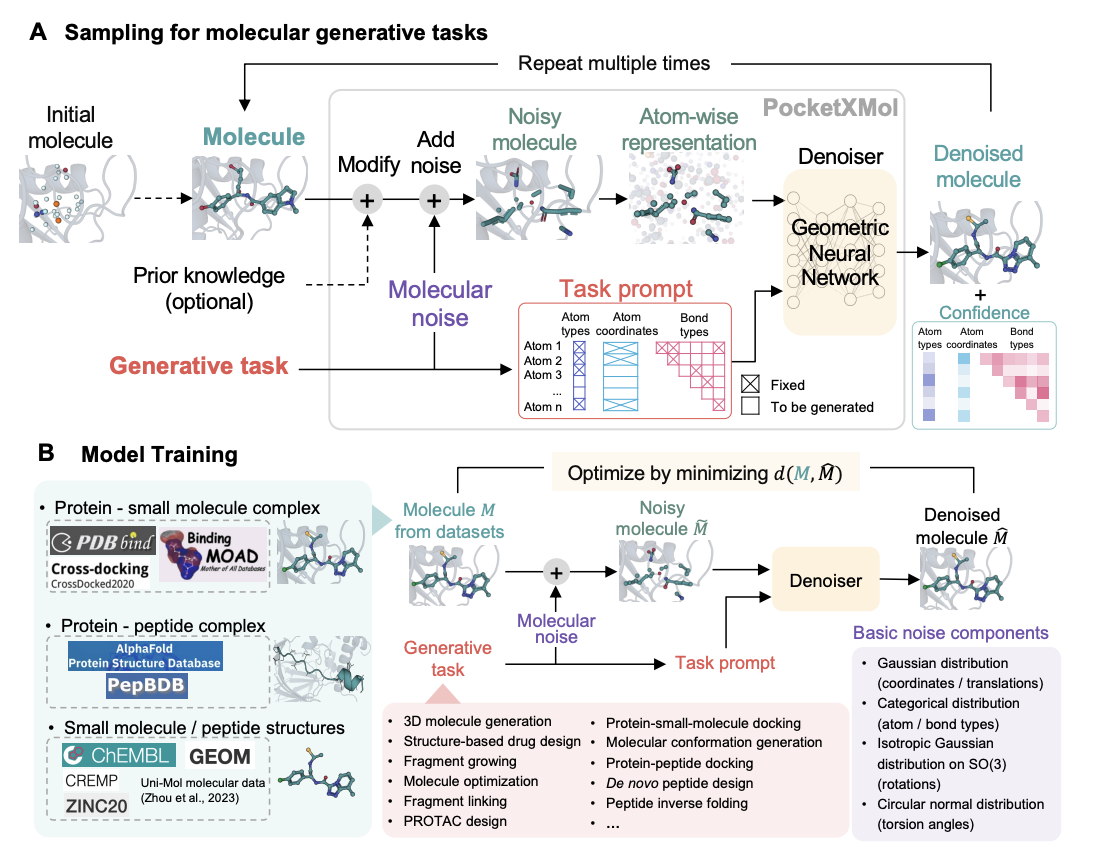

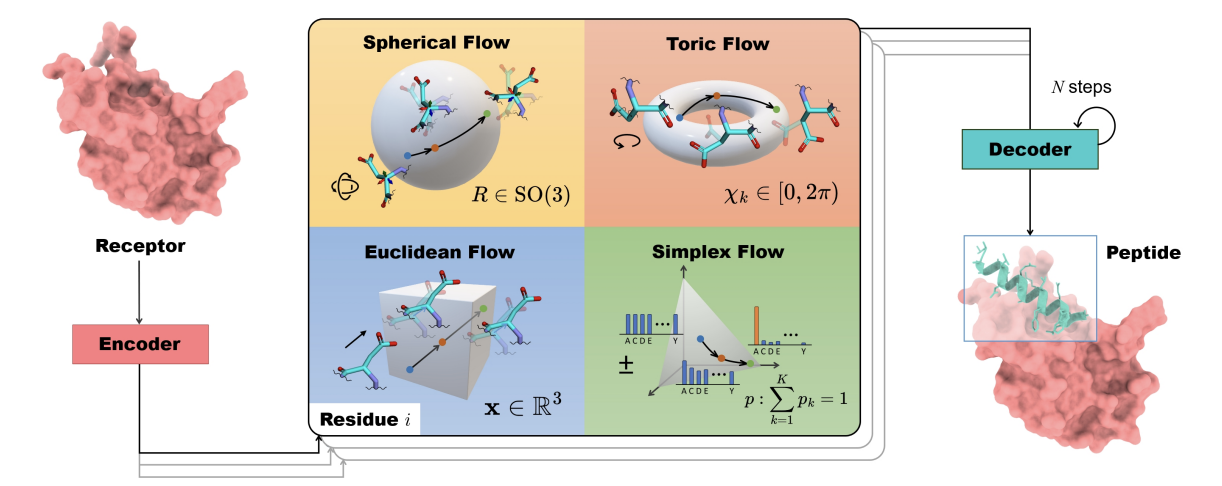

Full-Atom Peptide Design based on Multi-modal Flow Matching

Jiahan Li*, Chaoran Cheng*, Zuofan Wu, Ruihan Guo, Shitong Luo, Zhizhou Ren, Jian Peng, Jianzhu Ma (* equal contribution)

ICML 2024

PepFlow introduces a multimodal deep generative model based on the flow-matching framework for full-atom peptide design, leveraging SE(3) manifolds and high-dimensional tori to model backbone orientations and side-chain dynamics, achieving state-of-the-art performance across peptide design tasks.

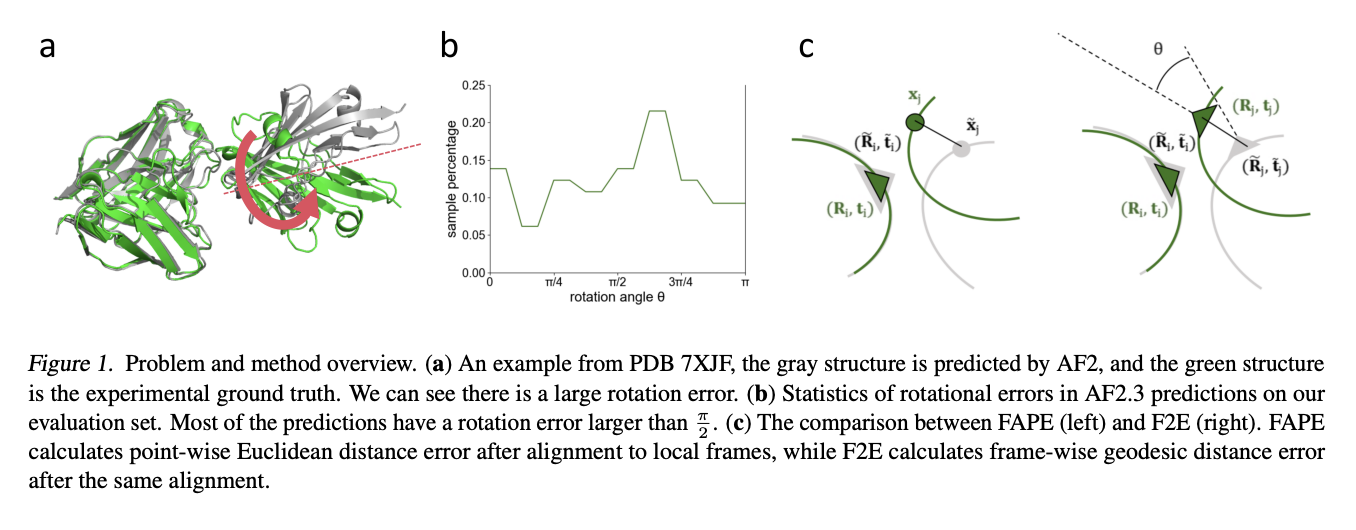

FAFE: Immune Complex Modeling with Geodesic Distance Loss on Noisy Group Frames

Ruidong Wu*, Ruihan Guo*, Rui Wang*, Shitong Luo, Yue Xu, Jiahan Li, Jianzhu Ma, Qiang Liu, Yunan Luo, Jian Peng (* equal contribution)

ICML 2024 Spotlight

This work introduces Frame Aligned Frame Error (FAFE), a novel geodesic loss that overcomes AlphaFold2's gradient vanishing issue in high-rotational-error targets, enabling more accurate antibody-antigen complex modeling and achieving up to a 182% improvement in correct docking rates.

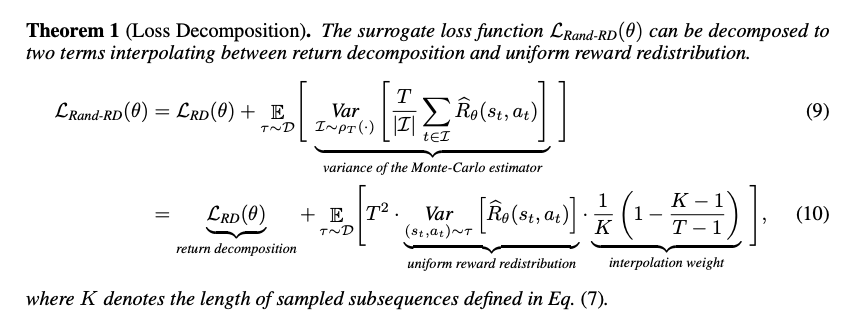

Learning Long-Term Reward Redistribution via Randomized Return Decomposition

Zhizhou Ren, Ruihan Guo, Yuan Zhou, Jian Peng

ICLR 2022 Spotlight

This work addresses episodic reinforcement learning with trajectory feedback by introducing Randomized Return Decomposition (RRD), a reward redistribution algorithm that uses Monte-Carlo sampling to scale least-squares-based proxy reward learning for long-horizon tasks, achieving significant improvements over baseline methods.
